The first step in operationalizing an AI governance program is understanding your AI environment. That understanding lets you guide responsible adoption and conduct risk assessments in line with your ethical and compliance standards. We'll help you inventory, audit, and assess your AI systems to support successful business outcomes.

Understand your AI footprint

AI innovation is essential to staying competitive, but adopting the technology comes with risks. OneTrust AI Governance gives you visibility into all artificial intelligence usage, development, and procurement across your organization, so you can effectively manage risks and build trust with stakeholders.

Responsibly adopt new technology with an AI audit

- Mature your AI governance program

- Enable innovation

- Navigate AI risk

- Meet evolving regulatory requirements

- Streamline AI governance

Automatically discover AI usage to control costs and ensure governance

Beyond being essential to your overall AI governance program, an AI audit, or inventory, can also be an effective cost management tool. Evaluating risk levels before making a new investment in AI can save your organization time and money by flagging technology whose risk is high or unacceptable before you commit budget to it.

- Discover key artifact file types associated with AI and ML technologies

- Use intake assessments to capture AI project context before the project kicks off

- Access pre-built templates to capture the context needed to meet the requirements of the EU AI Act, the NIST AI Risk Management Framework (RMF), the OECD AI Principles, and more
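For readers curious what artifact file-type discovery involves at its simplest, here is a minimal Python sketch. It is not OneTrust's implementation: it assumes a local directory tree, matches files purely by extension, and the extension-to-format mapping and the function names (`classify_artifacts`, `scan_directory`, `summarize`) are hypothetical illustrations.

```python
from collections import Counter
from pathlib import Path
from typing import Iterable

# Hypothetical, non-exhaustive mapping of file extensions commonly used for
# ML model artifacts to the framework or format they usually indicate.
ML_ARTIFACT_EXTENSIONS = {
    ".onnx": "ONNX",
    ".pt": "PyTorch",
    ".pth": "PyTorch",
    ".safetensors": "safetensors",
    ".h5": "Keras/HDF5",
    ".keras": "Keras",
    ".tflite": "TensorFlow Lite",
    ".pb": "TensorFlow (protobuf)",
    ".pkl": "pickle (often scikit-learn)",
    ".joblib": "joblib (often scikit-learn)",
    ".gguf": "GGUF (llama.cpp)",
}


def classify_artifacts(paths: Iterable[str]) -> dict[str, str]:
    """Return {path: format} for each path whose extension suggests an ML artifact."""
    found = {}
    for p in paths:
        # Compare case-insensitively so "model.PT" is treated like "model.pt".
        suffix = Path(p).suffix.lower()
        if suffix in ML_ARTIFACT_EXTENSIONS:
            found[p] = ML_ARTIFACT_EXTENSIONS[suffix]
    return found


def scan_directory(root: str) -> dict[str, str]:
    """Walk a local directory tree and classify every regular file in it."""
    files = (str(f) for f in Path(root).rglob("*") if f.is_file())
    return classify_artifacts(files)


def summarize(found: dict[str, str]) -> Counter:
    """Count discovered artifacts per format, e.g. to seed an AI inventory."""
    return Counter(found.values())
```

For example, `classify_artifacts(["models/churn.onnx", "README.md", "ckpt/model.PT"])` flags the ONNX and PyTorch files and ignores the README. Extension matching is deliberately crude: `.pkl` and `.pb` files are not always models, and a production discovery tool would also inspect file contents and scan cloud storage, not just a local disk.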

Maintain an accurate AI inventory to identify risks and demonstrate compliance

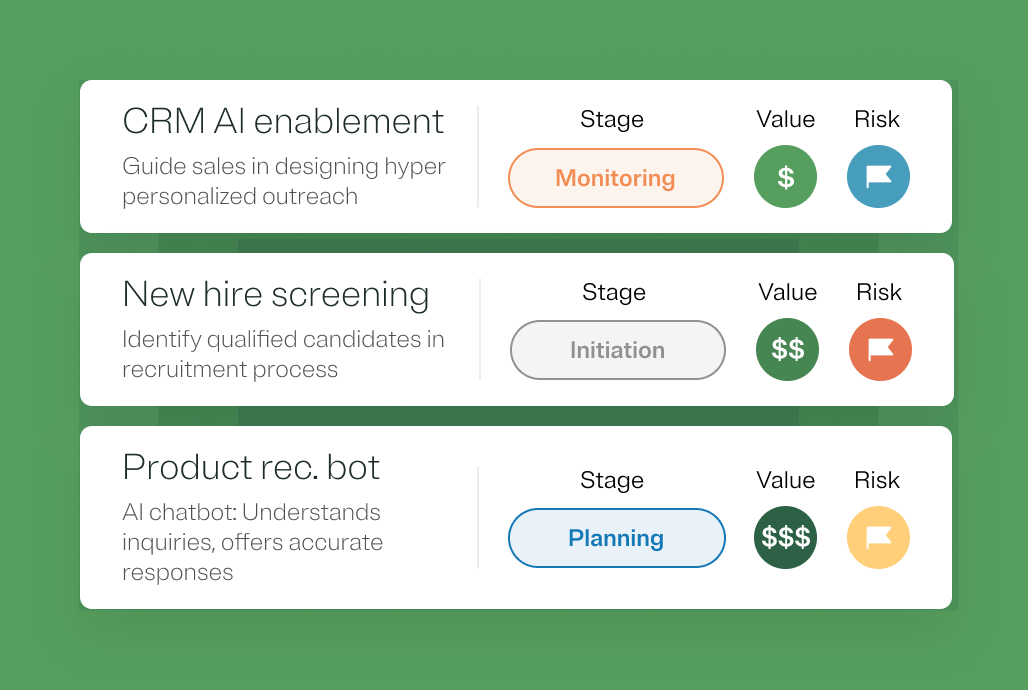

Risks vary based on context and regulatory requirements. Dynamically tracking AI models, projects, and training datasets is critical to helping your organization balance risk with reward and meet the obligations of key regulations like the EU AI Act.

- Track distinct AI initiatives and map their relationships to models and datasets

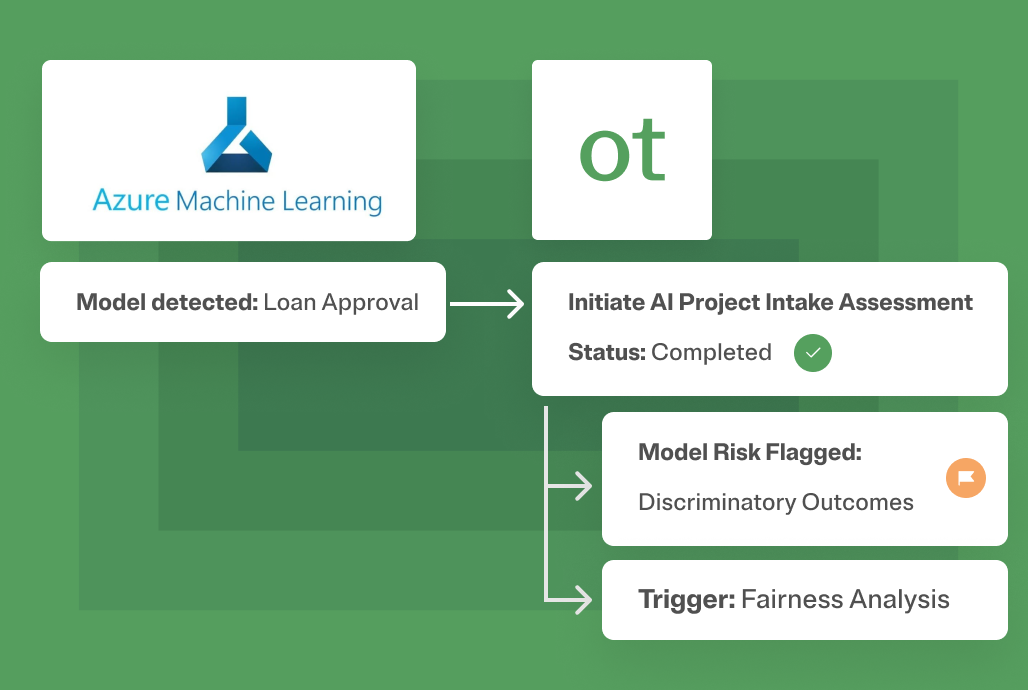

- Use MLOps integrations to auto-detect AI models and sync them with your inventory

- Track the datasets used to train models and surface insights about the types of data they contain

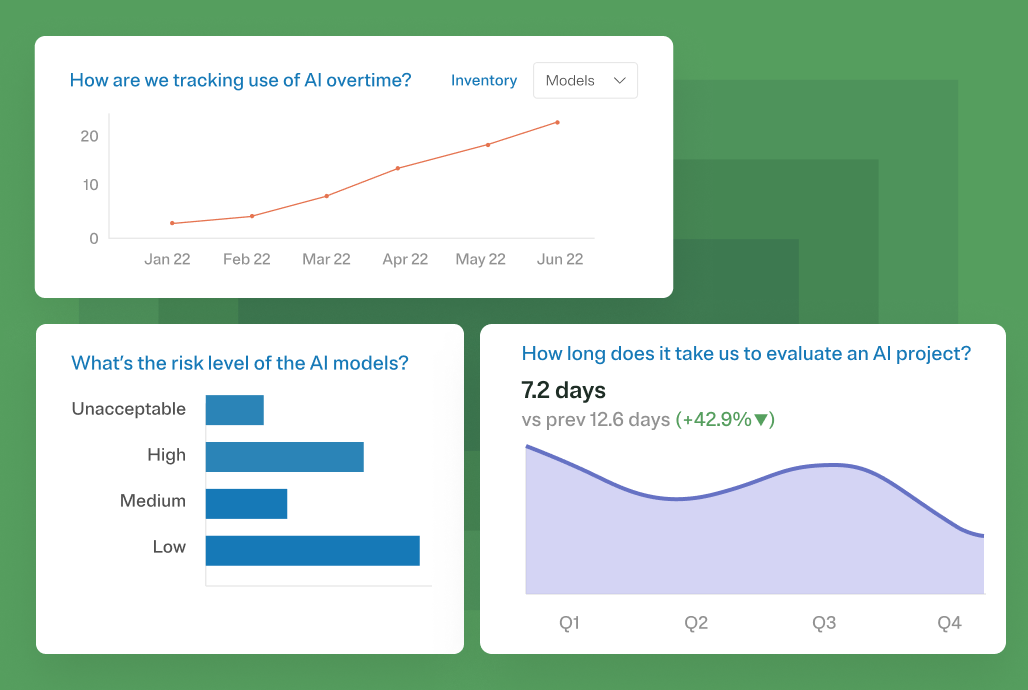

Use dynamic dashboards for transparent reporting

Creating an AI inventory is one thing, but summarizing key insights for continuous improvement is another. Our dashboards make it easy to aggregate real-time data into meaningful visuals that promote transparency and accountability.

- Easily identify model risk levels to inform business decision-making

- Quickly see how many high-risk AI projects are in production

- Share insights with your audit teams and other key stakeholders

Learn more about how to understand your AI footprint

Explore our resources below, or request a demo, to learn more about how we can support your journey to responsible AI adoption.

FAQ

We understand that risk management and AI governance are only effective if you have a full picture of your machine learning and AI applications. Our integration ecosystem supports auto-discovery and continuous monitoring across your IT assets. Our solution can automatically trigger AI project assessments from common project management tools like Jira, discover common AI file types stored in cloud and on-premises environments, and support automated model registration with common MLOps tools such as Azure ML, Google Vertex AI, AWS SageMaker, and Databricks MLflow.

AI is central to driving business automation and innovation, but using it well also requires a culture that prioritizes ethics, transparency, and accountability. While our AI Governance product supports this directly through intake assessments and risk management, our platform approach can help you build trust by design across your entire business, from internal ethics program management and awareness training to third-party risk management.